Layering Cake – Neural Networks

Whether you are building a simple spam filter or training a deep model to drive a car, understanding the basics of feed-forward neural networks gives you a strong foundation. They may seem complicated at first, but at their core, they are just layers of math functions that learn to recognize patterns, transforming raw data into meaningful predictions as training refines them again and again.

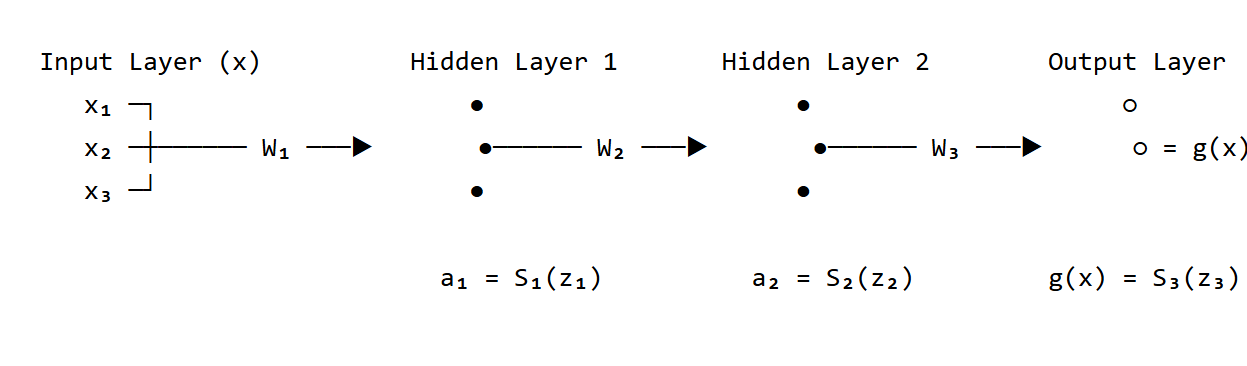

Each dot (● or ○) is a neuron.

Arrows represent weights (the connections between neurons).

Activations (like ReLU) are applied at the dots.

The output is a combination of the last hidden layer’s neurons.

Imagine a neural network as a stack of layers built from neurons (also known as nodes), with each layer serving a distinct role:

Input Layer: This is where everything starts. Each neuron in this layer corresponds to a feature in your input data – say, pixel values in an image or word frequencies in a sentence.

Hidden Layers: These are the heart of the network. Each neuron in a hidden layer takes inputs from the previous layer, multiplies them by weights (which determine the importance of each input), adds a bias term, and then passes the result through an activation function like ReLU (Rectified Linear Unit) or sigmoid. This activation introduces non-linearity, allowing the network to learn complex patterns.

Output Layer: Finally, the last layer takes the outputs of the final hidden layer, which already carry all the earlier transformations, and turns them into the final output. This could be a single value (like a house price prediction) or a probability distribution over categories (like identifying whether an image shows a cat, dog, or car).
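To make these roles concrete, here is a minimal sketch in plain Python (no NumPy, so every step stays visible). The helper names `relu`, `sigmoid`, `neuron`, and `softmax` are illustrative choices, not a library API, and all weights are made up. A hidden neuron computes a weighted sum plus a bias and applies an activation; for classification, the output layer commonly applies softmax so the raw scores become probabilities that sum to 1.

```python
import math


def relu(z):
    """ReLU: keep positive values, clip negatives to 0."""
    return max(0.0, z)


def sigmoid(z):
    """Sigmoid: squash any number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def neuron(inputs, weights, bias, activation=relu):
    """Hidden-layer neuron: weighted sum of the inputs, plus bias, then activation."""
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    return activation(z)


def softmax(scores):
    """Classification output: turn raw scores into a probability distribution."""
    m = max(scores)                          # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical numbers: two input features feeding one hidden neuron.
print(neuron([1.0, 2.0], weights=[0.5, 0.25], bias=-0.5))                      # ReLU
print(neuron([1.0, 2.0], weights=[0.5, 0.25], bias=-0.5, activation=sigmoid))  # sigmoid

# Raw scores for "cat", "dog", "car" become probabilities.
print(softmax([2.0, 1.0, 0.1]))
```

For a single-value output such as a house price, the last neuron would typically skip softmax and return its weighted sum directly.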

Forward and Only Forward

One defining feature of a feed-forward neural network is that data moves in only one direction – forward. There’s no looping back or feedback. Each layer feeds its output to the next, step by step, until the final prediction is made. This is why it’s called “feed-forward.”
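A forward pass is just a loop over layers in which each layer's output becomes the next layer's input. The sketch below assumes a tiny hypothetical 2-2-1 network (two inputs, two hidden neurons, one output) with hand-picked weights; `layer` and `forward` are illustrative names, not a library API.

```python
def relu(z):
    return max(0.0, z)


def identity(z):
    return z


def layer(inputs, weights, biases, activation):
    """Every neuron in the layer sees all inputs: weighted sum + bias, then activation."""
    return [activation(sum(x * w for x, w in zip(inputs, row)) + b)
            for row, b in zip(weights, biases)]


def forward(x, network):
    """Feed-forward pass: each layer's output becomes the next layer's input.
    Values only ever move forward; nothing loops back to an earlier layer."""
    for weights, biases, activation in network:
        x = layer(x, weights, biases, activation)
    return x


# Hypothetical 2-2-1 network with hand-picked weights.
network = [
    ([[1.0, -1.0], [0.5, 0.5]], [0.0, 0.0], relu),      # hidden layer (2 neurons)
    ([[2.0, 1.0]],              [0.5],      identity),  # output layer (1 value)
]
print(forward([3.0, 1.0], network))  # [6.5]
```

Training, which adjusts the weights, is a separate step and is not shown here; this only covers how a prediction flows through the layers.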

Now It’s Time to Bake a Cake!

Polished through repetition

Inputs: Dog-safe ingredients

Hidden layers: Mixing and baking steps (transformations)

Weights/activations: Recipe rules that control the process

Output: Finished dog cake, ready to eat

The dog-safe ingredients (like peanut butter, oats, and pumpkin puree) are like the input layer. Each ingredient represents a piece of information you start with: the raw data.

The steps you take – mixing the ingredients, blending them, baking them – are like the hidden layers of the neural network.

Each instruction (how much of each ingredient to use, how long to bake) plays the role of the weights and activation functions.

At the end, out comes a beautiful, dog-friendly cake – your output. The network has taken raw inputs and processed them step by step into something useful and delicious (well, to a dog).

Other network examples?

CNN – Convolutional Neural Network

Uses convolutional layers to automatically detect features (e.g., edges, textures, shapes).

Filters slide across the input image to extract local features.

Often followed by pooling layers, which shrink the feature maps (reducing dimensionality) by summarizing each small region, for example by keeping only its maximum value.
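Convolution followed by pooling can be sketched on a tiny 5×5 grayscale image with a dark left side and a bright right side. The 2×2 kernel is a hand-made vertical-edge detector chosen for illustration; real CNNs learn their filter values during training. Like most deep learning libraries, the code technically computes cross-correlation (the kernel is not flipped). `conv2d` and `max_pool` are illustrative names, not a library API.

```python
def conv2d(image, kernel):
    """'Valid' 2D convolution (no padding, stride 1): slide the kernel over
    the image and take a weighted sum at each position. The kernel is not
    flipped, so this is strictly cross-correlation."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [[sum(image[i + a][j + b] * kernel[a][b]
                 for a in range(kh) for b in range(kw))
             for j in range(out_w)]
            for i in range(out_h)]


def max_pool(fmap, size=2):
    """Non-overlapping max pooling: keep the largest value in each size x size block."""
    return [[max(fmap[i + a][j + b] for a in range(size) for b in range(size))
             for j in range(0, len(fmap[0]) - size + 1, size)]
            for i in range(0, len(fmap) - size + 1, size)]


# 5x5 image: dark (0) on the left, bright (1) on the right.
image = [[0, 0, 1, 1, 1] for _ in range(5)]
# Hand-made filter: responds where brightness increases from left to right.
kernel = [[-1, 1],
          [-1, 1]]

features = conv2d(image, kernel)  # 4x4 feature map
pooled = max_pool(features)       # 2x2 after pooling
print(features)
print(pooled)
```

The feature map responds only in the column where dark meets bright, and pooling halves each dimension while keeping that edge signal.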

RNN – Recurrent Neural Network

Maintains a “memory” (a hidden state) of previous inputs using loops in the network: the state from each time step is fed back in at the next step.

Good for recognizing patterns over time (e.g., audio or text).
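The “memory” idea can be sketched with a single-unit RNN using scalar weights (`w_x`, `w_h`, and `b` are hypothetical values, and `rnn_forward` is an illustrative name). The hidden state `h` is carried from one time step to the next, so two sequences that end with the same inputs can still leave the network in different states.

```python
import math


def rnn_forward(sequence, w_x, w_h, b):
    """Single-unit RNN. At each time step the new hidden state mixes the
    current input with the previous hidden state (the 'memory')."""
    h = 0.0                      # memory starts empty
    states = []
    for x in sequence:           # one iteration per time step
        h = math.tanh(w_x * x + w_h * h + b)
        states.append(h)
    return states


# Two sequences that end the same way (with zeros) but start differently.
started_high = rnn_forward([1.0, 0.0, 0.0], w_x=1.0, w_h=0.5, b=0.0)
started_zero = rnn_forward([0.0, 0.0, 0.0], w_x=1.0, w_h=0.5, b=0.0)
print(started_high[-1], started_zero[-1])  # the first still "remembers" its opening 1.0
```

Real RNN layers use weight matrices and vector-valued states, but the recurrence (new state computed from input plus old state) is the same.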

GAN – Generative Adversarial Network

Has two components:

Generator: Tries to create fake data that looks real.

Discriminator: Tries to distinguish between real and fake.

Both learn by competing with each other, improving over time.
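Training a real GAN needs a deep learning framework, so this sketch only shows the two competing objectives, written as the usual binary cross-entropy losses. `d_real` and `d_fake` stand for the discriminator's estimated probability that a real or a generated sample is real; the numbers are invented to illustrate two moments in training, and the generator loss uses the common “non-saturating” form. The function names are illustrative.

```python
import math


def discriminator_loss(d_real, d_fake):
    """Binary cross-entropy for the discriminator: lower when d_real is
    close to 1 (real looks real) and d_fake is close to 0 (fake looks fake)."""
    return -(math.log(d_real) + math.log(1.0 - d_fake))


def generator_loss(d_fake):
    """Non-saturating generator loss: lower when the discriminator is
    fooled, i.e. when d_fake is close to 1."""
    return -math.log(d_fake)


# Invented discriminator outputs at two moments in training.
snapshots = {
    "early": (0.9, 0.1),   # fakes are easy to spot
    "later": (0.6, 0.5),   # fakes have become convincing
}
for stage, (d_real, d_fake) in snapshots.items():
    print(stage,
          "D loss:", round(discriminator_loss(d_real, d_fake), 3),
          "G loss:", round(generator_loss(d_fake), 3))
```

As the generator gets better, its loss falls while the discriminator's loss rises: the two objectives pull in opposite directions, which is the competition that drives both networks to improve.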

NLP – Natural Language Processing

Strictly speaking, a field rather than a network type; modern NLP relies mainly on deep learning, sometimes combined with linguistic rules.

Tasks include tokenization, translation, sentiment analysis, and more.

Uses models like Transformers (e.g., BERT, GPT).
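Tokenization, the first task listed, can be illustrated with a deliberately naive word-level tokenizer built on Python's `re` module, followed by the word-frequency counts mentioned earlier as possible input features. This is a toy under simplifying assumptions: Transformer models such as BERT and GPT use learned subword tokenizers (WordPiece and byte-pair encoding, respectively) rather than splitting on words.

```python
import re
from collections import Counter


def tokenize(text):
    """Naive word-level tokenizer: lowercase, then keep runs of letters,
    digits, and apostrophes. Punctuation is dropped."""
    return re.findall(r"[a-z0-9']+", text.lower())


sentence = "The dog loves the cake. The cake loves the dog!"
tokens = tokenize(sentence)
counts = Counter(tokens)  # word frequencies, usable as input features

print(tokens)
print(counts["the"], counts["cake"])
```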

Want to try out examples? Visit Yun.Bun I/O.
